¶ eBook Formats

::: center

Decentralizing Electricity Production

:::

::: titlepage

Decentralizing Electricity Production

Howard J. Brown, Tom R. Strumolo

Yale University Press | New Haven

ISBN: 978-0300025699

ISBN-10: 0300025696

Updated: 2024-11-07

:::

::: flushleft

Published with assistance from the Kingsley Trust Association Publication Fund established by the Scroll and Key Society of Yale College.

Copyright © 1983 by Yale University.

All rights reserved.

This book may not be reproduced, in whole or in part, in any form (beyond that copying permitted by Sections 107 and 108 of the U.S. Copyright Law and except by reviewers for the public press), without written permission from the publishers.

Designed by James J. Johnson and set in Melior Roman type by P & M Typesetting, Inc.

Printed in the United States of America by Halliday Lithograph, West Hanover, Massachusetts.

Library of Congress Cataloging in Publication Data

Main entry under title:

Decentralizing electricity production.

Includes index.

1. Electric power production--Addresses, essays, lectures. I. Brown, Howard J., 1945- . II. Strumolo, Tom Richard, 1952- .

TK1005.D38 1983 363.6 83-3677

ISBN 0-300-02569-6

Copyright

All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without permission in writing from the Copyright Holder.

ENCODED IN THE UNITED STATES OF AMERICA

:::

¶ Dedication

To R. Buckminster Fuller, whose ideas, lifetime work, and belief in individual initiative provide the inspiration to “dare to be naive.”

¶ Preface and Acknowledgements

This is a book about the future, yet it includes no forecasts or predictions. Our motivation to prepare the book did not result from a curiosity about what is likely to happen but from an exploration of what could happen. As planners involved in energy issues daily, we began with the belief that energy (the way we produce, distribute, and use it) is at the heart of every social, economic, and environmental problem confronting society; and that energy policies and decisions based on projections and forecasts derived from recent trends can only encourage more of what we are already doing. It seems self-evident that if there is one thing we do not need, it is more of what we already have.

Fossil and nuclear fuel shortages, health and safety problems, inflationary pressures, environmental impacts, and financial problems are threatening the viability of our electrical energy system. Many people who are involved with these issues have come to feel that the problems inherent in the way society produces and distributes electricity are so serious that major changes are in order. But it is the responsibility of citizens who disagree with the status quo to propose alternatives, and that is what this book is about. Nevertheless, we do not presume that it contains answers, only possibilities.

In the numerous debates over nuclear power and over various specific alternative technologies, much of the attention given to alternatives focuses on forecasts of likely penetration into the market based on past trends. The most general question we address in this work is, “If we were to create a decentralized electrical energy system, how would it work?” As planners we concerned ourselves with only two general constraints: what is technologically possible (using existing knowledge and tools) and what is ecologically possible (considering existing conditions). Economic viability is something society creates after deciding what it wants, not something that should determine its needs. Making something economical is a policy problem. The question we need to ask is “How do we do it?” rather than “Should we?”

This outline of alternative methods for producing and distributing electricity clearly raises more questions than it answers, but we think it should be useful to anyone wanting to understand the options open to society. We hope it will serve as the basis for more research, for more thoughtful and meaningful discussion of plans by public and private agencies, and for the establishment of clearer social goals.

Our exploration of the question of alternatives for electrical energy generation began in 1972 at Earth Metabolic Design, Inc. (EMD), a nonprofit research organization concerned with prudent resource planning. At that time Medard Gabel, EMD’s director, organized a project to assess the potential of using renewable energy sources on a global scale. The project is described in his book Energy, Earth and Everyone. Numerous subsequent research and planning projects conducted by private and government agencies provided opportunities for expanding our thoughts on the topic. Thus, this book is largely the result of ten years of research in alternative energy systems and of a conference entitled “The Problems and Potentials of Decentralizing Electrical Production,” held at Wesleyan University (Middletown, Connecticut) in April 1978. The one-day symposium was sponsored by the College of Science in Society at Wesleyan, and by EMD, Northeast Utilities, the Connecticut State Energy Office, and the New England Regional Office of the Department of Energy. Some of the speakers have contributed articles to this book.

The conference literature set forth the following questions to be addressed:

Can significant amounts of electricity be produced from small-scale renewable sources such as wind, photovoltaics, cogeneration, and hydroelectric? How much?

Have these systems proved themselves? Are they reliable?

Can they compete economically with fossil and nuclear fuels?

What are appropriate scales of production?

What kinds of institutional changes would be required?

What kinds of technological changes would be required?

Can electricity be produced in competitive markets by small producers?

Can continuous supply be assured? Can quality be assured?

How would the system be managed? What role would utilities play?

Would decentralized systems save money? Would they pollute more or less?

How would electrical power be stored?

Since the conference we have added some more critical questions:

What is “appropriate” technology regarding electrical generation?

To what extent can wind supply this country with electricity? Hydroelectric power? Cogeneration? Photovoltaics? Solid waste?

Can other sources such as hydrogen and fuel cells supply a sufficient amount of electricity?

How could a diversified grid be decentralized, integrated, and managed?

How can past experience guide us?

Are there diseconomies of scale of electrical production?

What impacts on employment would a decentralized system have?

How can such a system be implemented?

We have taken special care to present a comprehensive view of the argument by dealing with as many aspects as possible: political, economic, legal, environmental, and technical. The conception of an alternative structure for the electrical energy industry that emerges from this volume represents our own views, which are not necessarily those of the individual authors whose work is included. In our own research over the years we evolved the overall thesis of the book and identified the areas of research and expertise that would be required to establish the concept’s feasibility. We invited each of the authors because we felt he or she was making a creative contribution in those fields that are important to the future of electrical energy systems. However, each of the authors, if given the responsibility of editing this volume, might draw a very different set of conclusions from the material presented.

The first three articles, comprising Part I, form the philosophical framework for the rest of the book and establish an argument for decentralization. Part II explores how decentralized production in a utility grid could be managed and provided. It contains articles on the resources and technologies of production; the technologies for grid integration and storage; the economics, management (including public policy), and engineering; and the role of the marketplace. Part III includes articles on a range of political, practical, ideological, and economic issues relating to decentralization.

We want to express our appreciation to Constance Ettridge, Gunhild Gross, Daniel Bob, and James Dray for their patient and tireless help. Without their assistance this project would never have been completed.

Howard J. Brown

Tom Richard Strumolo

¶ The Context for Reform

¶ Problems, Planning, and Possibilities for the Electric Utility Industry

HOWARD J. BROWN

PROBLEMS: A MARGINAL UTILITY

Electric utilities have problems that can be understood only in the larger social and ecological context. More and more of a rapidly growing world population wants access to the resources needed to create prosperity. Yet the resources on which we have become dependent are finite. Given present patterns of using these resources, there simply are not enough to ensure both prosperity and equality; and the biosphere cannot absorb all the by-products of the endless expansion needed to meet global needs. Simultaneous inflationary and recessionary pressures (and the threat of supply disruptions) are among the early signs of stress resulting from this predicament.

Decreasing net productivity from dependence on traditional fuels and political maneuvering for control of remaining reserves both contribute to the problem. Basic supply and demand economics is based on the assumption that as resources become scarce, rising prices from the supply/demand imbalance will pay for finding more. But the concept of net energy availability illustrates the problem with that assumption: the closer we come to the end of our fuel reserves, the more energy it takes to get each unit into usable form.

This logic was described by physicist Gerald Feinberg in his now-prophetic 1969 book, The Prometheus Project. In the early developmental stages of the American commercial nuclear fission industry, economists recognized the finite supplies of concentrated nuclear fuels in the earth. Since they understood that uranium exists in dispersed form in large quantities (even in common granite), they assumed that as concentrated resources ran out, higher prices would pay for mining less concentrated reserves. Feinberg pointed out what economists did not recognize: that it may take more energy to get that dispersed uranium than could be extracted from it once mined.

Ecologist Howard Odum used the net-energy concept to describe the problem of our dependence on petroleum. Each new barrel of oil is harder to find, deeper in the ground (harder to mine), and lower in quality (harder to refine). Because of this, Odum argued, an inherent relationship exists between dependence on fossil fuels and inflation. As we get closer to the end of concentrated reserves, we find that each new barrel yields less useful net energy; thus, we spend more and more of each dollar on getting energy and less for the desired product. Long before we actually run out of fuel, we may come to the point where we are spending more energy than we are producing. And the theory can be extended: long before we reach the point of negative net productivity, we may reach the point where there may not be enough traditional fuels to build alternative technologies and maintain the fabric of society at the same time, at least given present productivity.
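The net-energy argument can be put in a single line. The notation here is ours, not the authors': $E_{\text{in}}$ stands for the energy spent finding, extracting, and refining a fuel, $E_{\text{out}}$ for the energy the fuel finally delivers, and their ratio is what energy analysts commonly call the energy return on investment (EROI).

$$
\text{EROI} = \frac{E_{\text{out}}}{E_{\text{in}}}, \qquad
E_{\text{net}} = E_{\text{out}} - E_{\text{in}}, \qquad
\frac{E_{\text{net}}}{E_{\text{out}}} = 1 - \frac{1}{\text{EROI}}
$$

Read this way, an EROI of 10 leaves 90 percent of gross output available to the rest of society, an EROI of 2 leaves only 50 percent, and an EROI below 1 means net energy is negative, the situation Feinberg anticipated for dispersed uranium and Odum for oil drawn from ever poorer reserves.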

The inflationary impact of continuing dependence on diminishing resources is amplified by the ever-increasing demand for capital. As Lovins points out in chap. 2, the capital required for finding, extracting, preparing, and safely converting fuels from a shrinking resource base drains the rest of the economy of finite capital. In the process, the development of alternatives is retarded. Thus we are already feeling, and will increasingly feel, the economic impact of dependence on disappearing fossil fuels; it is not just a problem for the future. Ecological limits are now impinging on our economic system; and without structural reform, institutions have difficulty adapting. Political and economic turmoil over the control of petroleum, uranium, and other nonrenewable resources is a logical result of diminishing supplies.

Given the new ecological realities, accepted policy levers are simply not effective. For example, levers traditionally used to combat recession (i.e., stimulate economic growth) only stimulate inflation by increasing demand and competition for finite, harder to get, energy-expensive resources. When prices rise but the net availability of resources needed to meet demand actually declines, both recession and inflation accelerate. Prices rise but production and consumption do not rise proportionately. Conversely, levers used to combat inflation by slowing down the economy also accelerate inflation and recession. When the economy slows down, production declines and more people lose work, but prices do not come down because the energy problem remains. In fact, because capital investment in scarce fuels only rises as a share of total economic activity, prices only rise further. Inflation and recession can be interpreted as two sides of the same coin: feedback from a finite biosphere telling us that present patterns of expansion cannot continue indefinitely. For the first time, argues economic activist Hazel Henderson in her book Creating Alternative Futures (New York: Berkley, 1978), our economy is being driven and directed primarily by ecological forces rather than by policymakers.

The perspective of a finite world, however, does not lead irrevocably to the assumption that there are limits to progress and wealth. Evidence is accumulating that with proper management, available resources may well prove adequate for long-term prosperity and equality. It is equally apparent that if improperly managed, our resources may become scarce, leading to further economic, political, and ecological deterioration. Thus, society’s concern over access to resources is caused not by their scarcity or by ecological limitations but by the way we think about ourselves and our problems and the way we manage (or fail to plan and manage) the resources available.

Planning and management decisions are based on assumptions about wealth, human behavior, and the environment. When these ideological and economic assumptions inaccurately describe our place in the world (e.g., the desirability of undirected economic growth), they exacerbate the problems.

There seem to be universal identifiable stages in the way systems relate to their environment. For example, Howard Odum points out that “during times when there are opportunities to expand energy inflows, the survival premium by Lotka’s principle is on rapid growth, even though there may be waste.”1 This condition describes the period of rapid growth evident both in the early development of ecosystems and in the growth of Western industrial economies to the present. These periods of rapid growth, Odum maintains, require the expansion of resource availability.

We observe dog-eat-dog growth competition every time a new vegetation colonizes a bare field in rapid expansion to cover the available energy receiving surfaces. The early growth ecosystems put out weeds of poor structure and quality, which are wasteful in their energy-capturing efficiencies, but effective in getting growth even though the structures are not long lasting.

Most recently, modern communities of man have experienced two hundred years of colonizing growth, expanding to new energy sources such as fossil fuels, new agricultural lands, and other special energy sources. Western culture and more recently, Eastern and Third World cultures, are locked into a mode of belief in growth as necessary to survival. “Grow or perish” is what Lotka’s principle requires but only during periods when there are energy sources that are not yet tapped.

Odum points out that during times when energy sources have been depleted and new sources cannot be found, Lotka’s principle requires “that those systems win that do not attempt fruitless growth but instead use all available energies in long-staying, high-diversity, steady-state works.” In this condition, which prevails when supplies of critical resources are dwindling, survival is dependent on the type of resources we use and how well we use them rather than on how much or how many we use.

The explosion of interest in biology and ecology during recent decades has dramatically improved science’s understanding of how living systems behave and adapt successfully under differing conditions. Yet we have not effectively integrated this knowledge into the study, planning, and management of our social and technological systems. Nowhere is the gulf between new realities and old perceptions more evident than in our electrical energy production and distribution system.

The electric utility industry of the United States can be characterized as a vertically integrated industry composed mostly of publicly regulated and protected regional monopolies. These institutions are assigned the right and responsibility to produce and distribute sufficient, reliable, and high-quality electricity to meet consumer demand in the most economical fashion. Many electrical utilities are finding it difficult to meet these requirements and remain solvent.

Not long ago private electric utilities represented ideal security for investors, but in recent years utilities have become questionable investments in many parts of the country. Private utilities, like other corporations, must maintain or increase their income to remain attractive investments to stockholders, and there are three primary ways in which they can accomplish this: sell more electricity, raise prices, or reduce production costs. Each of these options is discussed below.

Historically, increasing sales has been the simplest way for utilities to raise revenues. In periods of widely available energy resources (with which to generate electricity) and rapidly expanding economic activity, utilities fit neatly into the Keynesian economic model. The best way to stimulate growth was to build new generating capacity, thereby increasing the availability of low-cost electricity to sell; that low-cost electricity, in turn, helped attract new growth which would consume more electricity, raising revenues and permitting further construction. This cycle of growth made the interests of the utilities seem allied directly with the general interests of the people of a region, state, or country because of the accepted Keynesian assumption that new business activity means more jobs, more spendable income, further business activity, and so on. Until recently, limits to this cycle of growth were not considered by utility planners or state and federal regulators. States began and continue to allow utilities to reflect capital investment in rate structures, thus encouraging them to expand. This rate-base accommodation in conjunction with the long-term federal tax advantages of increased capital investment made expansion seem very logical.

Thus, under advantageous economic conditions, it has been widely assumed that a mutually supportive relationship exists between the availability of electricity and economic growth. Utilities borrow money from private investors to build new generation capability, and generation capability in turn stimulates the economy to such a degree that consumption increases and revenues from new customers are sufficient to pay off the loans.

A new cycle has set in, however, in which costs of capital and fuel are rising while the growth of both the population and the economy is slowing down. Therefore, in many parts of the country, utilities are finding themselves in the position of having to pay off past loans for current overcapacity without experiencing a concomitant increase in new sales. As prices of electricity rise, price elasticity becomes an increasingly important factor as customers begin to reduce consumption by conserving, switching to other energy forms, and even producing their own. Thus, increasing sales will be less and less of an option for generating the income to pay off loans and increase future sales, and limited cash flow will become an increasingly serious problem.

Another way that utilities, like other businesses, can raise revenues is by increasing the price of the commodity produced. Since most utilities are monopolies, they are subject to the regulatory control of local, state, and federal agencies; but until recently utilities have had little difficulty in gaining the approval of public utility commissions and other regulatory agencies for rate hikes. Because the cost of producing electricity has been very low in relation to the society’s overall standard of living, because electricity is a relatively clean and flexible form of energy, and because it is essential for many end uses, price elasticity has been low and price increases have not significantly affected demand.

If increasing sales is no longer a reliable source of new income for utilities, neither are rate increases: the inflationary pressures that have brought about the spreading consumer movement have also pushed public utility commissions to scrutinize utility requests for rate increases and new facilities more closely. Lower profits mean higher interest rates and further rising costs.

The last mechanism for increasing income is to reduce costs by, for example, producing energy from newer, cheaper sources, improving management techniques, and improving the efficiency of transmission. To understand the seriousness of the dilemma confronting the utilities, we must reexamine, in the light of new circumstances, the three mechanisms utilities have historically used to increase profits.

Increasing fuel and capital costs, environmental and safety concerns, as well as general inflation are driving up the cost of electrical generation. Reducing costs is simply not an option available to public utilities, whose mandate is to provide reliable electric power and who come under severe criticism from the public and from decision makers when reliability is sacrificed. Costs have remained as low as they have in the nuclear and fossil fuel-based utility industry because of the large degree to which total costs have been externalized, that is, borne by government or (in the case of nuclear) simply left for future generations. But increasing demands for institutional responsibility are likely to force increased internalization of costs now externalized, causing further major increases in costs for utilities and a further erosion of sales potential.

As conservation is accomplished, the high fixed costs from nuclear investments mean that unit costs for consumers rise further, encouraging more conservation and small-scale alternatives. Thus the utilities, fulfilling their social mandate to produce more electricity, are caught in the economic dilemma. Many have made enormous commitments to new nuclear power plants, borrowing at ever higher interest rates to pay the spiraling capital costs of new nuclear plants that will produce electricity to stimulate economic growth (and eventually demand) that is simply not likely to occur.
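The mechanism described here, high fixed costs spread over shrinking sales, can be sketched numerically. Everything in the sketch is hypothetical: the cost figures are invented, and the constant-elasticity demand response is our own simplifying assumption, not a model used by the author. Under those assumptions it shows only that each round of conservation raises the average price, which in turn prompts further conservation.

```python
# A minimal, hypothetical sketch: fixed (capital) costs are spread over
# fewer kilowatt-hours as sales fall, so the average price per kWh rises.
# All numbers are invented for illustration.

def unit_cost(fixed_cost, variable_cost_per_kwh, kwh_sold):
    """Average cost per kWh: fixed costs divided by sales, plus running costs."""
    return fixed_cost / kwh_sold + variable_cost_per_kwh


def conservation_spiral(fixed_cost, variable_cost_per_kwh, kwh_sold,
                        initial_cut, elasticity, rounds):
    """Simulate repeated rounds of price increases and demand response.

    Assumption (not from the text): customers respond to each price change
    with a constant price elasticity, so sales are multiplied by
    (new_price / old_price) ** -elasticity.
    Returns a list of (kwh_sold, price) pairs, one per round.
    """
    price = unit_cost(fixed_cost, variable_cost_per_kwh, kwh_sold)
    kwh_sold *= 1 - initial_cut  # first round of conservation
    history = []
    for _ in range(rounds):
        new_price = unit_cost(fixed_cost, variable_cost_per_kwh, kwh_sold)
        history.append((kwh_sold, new_price))
        # A higher price prompts further conservation, lowering next round's sales.
        kwh_sold *= (new_price / price) ** -elasticity
        price = new_price
    return history


if __name__ == "__main__":
    # Hypothetical utility: $500 million/year fixed costs, 3 cents/kWh
    # running costs, 10 billion kWh sold, 5 percent initial conservation.
    for sales, price in conservation_spiral(500e6, 0.03, 10e9, 0.05, 0.3, 5):
        print(f"{sales / 1e9:6.2f} billion kWh at ${price:.4f}/kWh")
```

With a small elasticity the spiral slows and levels off rather than collapsing; the point of the sketch is the direction of each step, not its size.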

In periods of slow or even nonexistent economic and population growth, even the most traditional economic analysis cannot explain decisions to build. Having already borrowed large sums for plants under construction, utilities must continue to build or be faced with debts often large enough to create fiscal insolvency. In most states, plants that do not produce cannot be included in the rate base. The remaining defense for building the plants is to decrease dependence on foreign oil, but conservation is a cheaper and more effective way to accomplish that goal and one that citizens are likely to carry out on their own as prices rise.

Like many municipal governments, many private utilities are managing money for short-term solvency while selling out long-term stability. When other future costs like those of nuclear waste disposal, plant decommissioning, rising fuel costs, and increasing environmental safeguards are combined with the costs of capital and compared with the economics of conservation, the increasing availability of user-owned renewable capacity, the erosion of the individual’s real wealth, and declining population (in many areas), it is difficult to understand the logic of advocating further investment in massive nuclear capacity. Major financial institutions are increasingly recognizing this, which is why the cost of money is rising for nuclear plants even faster than in the economy as a whole. Ultimately, someone will have to pay for the future costs, and it will be not only the investors in utilities but the public as well.

So electric utilities, once the symbols of American economic strength, are becoming the symbols and victims of the changing environment, clinging to old perceptions despite new realities. Utility planning, once a primary force in directing the economy, is now being driven by forces outside the economy.

PLANNING: AN ALTERNATIVE TO FORECASTING

When an electric utility goes before a public utilities commission for permission to build additional central power station generation capacity, it relies on forecasts of demand to demonstrate the need and validate its requests. Forecasting is a technique for predicting the future and is used by practically all corporate and government planners in the United States as the basis for formulating national, state, and local policy. It consists of a set of techniques of varying degrees of sophistication and detail but all relying fundamentally on the charting and extrapolation of historical trends to predict future conditions.

A “good” forecast is based on actual monitoring of many social variables related to the subject of a forecast over a long period of time and on the construction of equations describing the relationships. A “poor” forecast tracks only one or a few variables for a short period of time. Most utility forecasts are extremely complex and sophisticated mathematical models designed to describe the quantity and characteristics of electrical energy demand for a region. The significance of utility forecasting in shaping society’s future is little understood; and the shortcomings of the methods and assumptions used in forecasting are even less understood.

Many regulators are impressed with the detailed mathematical analysis used in forecast reports. The sheer volume of quantitative material in a forecast lends it a certain scientific credibility with both regulators and the public. Acceptance of the reliability of a utility forecast of demand implies some tacit acceptance of plans for meeting the demand. The character of the supply system, in turn, has a dramatic impact on the future of local, regional, and even national economies (see chap. 2); on the environment; and on the political structure of society. Thus, in an indirect but real way a forecast serves as a plan, but because it is not called a plan many essential responsibilities associated with planning are omitted from the decision-making process.

Two general characteristics of all forecasting must be understood. First, forecasts are constructed on a foundation of values held by the forecaster, the sponsoring institution, the professional community, and the society at large; second, all forecasts, no matter how sophisticated, are based on past patterns of behavior. Both characteristics are discussed below.

Forecasts are constructed on a foundation of value judgments that are almost always implicit. Often the values are not clear, even to the forecaster; sometimes they are intentionally obscured by the masses of data and equations that are used. Each set of data reflects assumptions that led to its selection, and each equation is based on assumptions about behavior, past and future.

Because of the common belief that numbers are inherently objective and because many people are intimidated by advanced mathematics, complex forecasts may actually receive less scrutiny than simple ones. But the values that go into the forecast process very much determine the conclusions that come out. In the end, utility forecasts usually say what forecasters or the employers of forecasters want or expect them to say. Utility models generally support the need for new nuclear or other central station capacities but rarely reflect the need to conserve, decentralize, diversify, or nationalize production. Forecasts to support these latter needs are equally complex, sophisticated, and technically competent, but they are likely to be generated only from models built by individuals whose values and beliefs differ from those of the authors of utility models.

A series of fundamental assumptions are implicit in the demand forecasts used by most utilities, and they should be made explicit. They are:

Economic growth can and should be sustained into the foreseeable future.

A healthy economy requires increasing demand for electricity.

An increased supply of electricity fosters economic growth.

There are sufficient resources to sustain continued economic expansion.

There are no particular ecological limitations to sustained growth.

No particular social or political circumstances will inhibit growth or interrupt supply.

Descriptive mathematical models can be objective.

The future can be predicted by such models with a considerable degree of accuracy.

In addition to these, many other assumptions and values are specific to each forecast or model. The selection of variables to be included in a model is based on assumptions about what is most important, whose data are most accurate, and how far back in time data should be assessed to identify trends and relationships.

The way data are used in the model represents a second layer of value decisions. The equations within the model describe the relationships among the components (or variables). In some cases the equations result from extensive study of the behavior of the variables and their relationships over time; in others the relationships are derived from surveys of “experts” in the field; in yet others they are based on “best guesses” by forecasters. In even the most thoroughly researched examples, values play a role not only in data selection but also in the definition of the relative importance of the variables and in assumptions of causality in the relationships. For example, research may show that over a ten-year period, demand correlated directly with increases in square feet of retail commercial space in a region. Square feet of retail space may then become a reliable indicator of commercial electricity demand. Accepting the relationship, however, requires making assumptions, and the assumptions any individual is willing to make are based on values. Important questions should be asked, for example, “Would the mathematical relationship still be correct if the variables had been plotted and compared twelve, fifteen, or twenty years ago?” “Are the data accurate?” and “Is there any reason to believe that because this relationship held true for ten years it will continue to be true in the future?” The willingness of any forecaster to accept such a relationship as valid is likely to be tied to his predisposition about the outcome.
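The question of whether the relationship would hold over twelve, fifteen, or twenty years can be made concrete with a small, entirely hypothetical example. The sketch invents twenty years of retail floor space and commercial demand in which demand per added square foot falls halfway through the record, then fits an ordinary least-squares line over the last ten, fifteen, and twenty years. The fitted "indicator" changes with the window chosen even though every fit is arithmetically correct. `fit_line` and the data are illustrative inventions, not anything drawn from a utility model.

```python
# Hypothetical illustration: the same two series yield a different
# "reliable indicator" depending on how many years of history are fitted.

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Invented data for 20 years: retail floor space (million sq ft) and
# commercial electricity demand (GWh). Demand per added square foot is
# assumed to drop from 15 to 8 GWh per million sq ft halfway through.
space = [10 + t for t in range(20)]
demand = [15 * s if s < 20 else 285 + 8 * (s - 19) for s in space]

for years in (10, 15, 20):
    slope, _ = fit_line(space[-years:], demand[-years:])
    print(f"last {years} years: {slope:.1f} GWh per million sq ft")
```

A forecaster who fits only the most recent decade would report 8 GWh per million square feet; one who reaches further back would report a larger figure. Choosing the window is exactly the kind of value-laden decision the text describes.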

In many models, variables forecast by in-depth research and sophisticated techniques are used in combination with variables projected by pure guesswork. The research-derived forecasts obscure not only the role of values within them, such as in the example above, but also the more overt value judgments around them. Thus, values are important because they affect the outcome of the forecast; and the forecast, in turn, is used to make policy about the way limited resources are invested. The way they are invested determines (by limiting or encouraging possibilities) directions for the future.

The forecast thus plays a major role in formulating social policy, but the forecaster escapes responsibility for making social choices. As “social scientists,” most forecasters view themselves as students of society rather than interveners. This insidious, forecast-based decision-making process limits social responsibility and social choice, both of which are crucial to democratic systems.

The second major shortcoming of forecasting stems from its reliance on historical trends. If there is one thing we can say with certainty about the future, it is that it will not resemble the past; and if there is one thing we can learn from the past, it is the probability of the improbable. Yet the only image of the future that can emerge from forecasting is a reflection of the past. In a forecast the improbable always remains improbable. Depending on methods and assumptions, forecasts may differ regarding future rates of change, but alternatives, creativity, chance, and nature are all left out.

Early forecasts were constructed from the linear extension of past trends. Russell Ackoff, chairman of the Department of Social Systems Science at the University of Pennsylvania’s Wharton School, has observed that the only value of linear trend extrapolation is to show what cannot happen. Thus, such trends can be used to demonstrate why change is necessary but not to determine the character of the change. Science has found no continuous linear trends, and social phenomena seem no exception. Events and forces external to the subject of the forecast intervene to modify the behavior of all systems.

The development of more sophisticated econometric models in the 1960s and 1970s made forecasting more sensitive to many influences ignored by simpler techniques. Yet even the most advanced econometric models are at best reflections of the past. As I have already pointed out, even these models are based on economic assumptions about wealth, success, and growth that simply do not account for a host of external factors that can rapidly affect human behavior (such as the demand for electricity).

Though econometric modeling is an improvement over linear projections, events and forces that can enormously influence demand cannot be included if they occur randomly, irrationally, or so infrequently that the pattern of their occurrence cannot be monitored or predicted; or if they are more subtle than our tools can monitor. Such events include:

political changes (embargoes, price manipulations, sabotage, strikes)

ecological events (earthquakes, severe weather conditions, and other catastrophes)

absolute limits of resources (and their net energy implications)

changes in values (interest in self-sufficiency, concern about pollution, health, and safety)

technological innovation (advances in alternative technologies).

In defending overly optimistic forecasts in the face of shrinking demand after the first oil embargo in 1973-74, some utility spokesmen argued that the embargo was an “unpredictable” intervention and therefore could not fairly be used to point out the shortcomings of recent forecasts. Such events may not reflect on the quality of forecasts, but they do call their reliability into question.

In spite of the shortcomings of forecasts, individuals and institutions must allocate some portion of their time to preparing for the future. Forecasters are assigned the responsibility of predicting the future so that decision makers can know how to invest resources and prepare. Some forecasters point to their records as evidence that, at least in the short term, they have often been right. But correctness is only a partially valid defense of the role of forecasting, for two reasons: (1) Because many changes occur slowly over a long period of time, predicting trends can have a reasonable degree of reliability in the short term but none in the medium or long term. (2) All prophecies (especially those made by large institutions with large impacts on society) tend to become self-fulfilling. The second point is especially important.
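Point (1) can be made concrete with a little arithmetic. Suppose a forecast assumes one constant annual growth rate and demand actually grows at another. The ratio of forecast to actual demand then compounds year by year, so the error is small at first and grows steadily. The sketch below is a minimal illustration under that simplifying assumption. The function name and the 7 and 4 percent rates are hypothetical and are not figures from this book.

```python
def forecast_ratio(assumed_growth: float, actual_growth: float, years: int) -> float:
    """Forecast demand divided by actual demand after `years` years.

    Both series start from the same base and grow at constant annual rates
    (a deliberate simplification).
    """
    return ((1 + assumed_growth) / (1 + actual_growth)) ** years


if __name__ == "__main__":
    # Hypothetical rates: the forecast assumes 7%/yr; demand actually grows 4%/yr.
    for horizon in (1, 5, 10, 20):
        overshoot = forecast_ratio(0.07, 0.04, horizon) - 1
        print(f"{horizon:>2} years: forecast exceeds actual by {overshoot:.0%}")
```

With these invented rates, the one-year gap is about 3 percent, which is easily lost in the noise of a single year's data. After twenty years the gap is roughly 77 percent.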

Society in general, and electric utilities in particular, have finite capital resources to invest in any given activity. When utilities create enormous capital investment programs to construct large fossil and nuclear-fueled central generating capacity, they are shaping the future. Customers must pay a share of the cost of the plants whether or not they want or need the electricity that will be generated. Consumption has historically been encouraged by rate structures that reward waste, and alternatives for generation have been discouraged because utilities have controlled and restricted access to the distribution system. Only utilities could be guaranteed a return on investment and could attract government subsidies for generating electricity.

Given these circumstances, the tendency of self-fulfilling prophecies to occur during times of rapid economic growth is easy to see. The forecaster predicts rapid growth, the directors and regulators accept the forecast because it is professional and sophisticated, and finite resources are invested in such a way as to encourage and direct growth. Sometimes the participants have been cognizant of the causal relationship between the forecast and consumption, sometimes not; but regardless, the process is insidious. The tendency of utility forecasts to be self-fulfilling does have its limits (given the factors discussed above), but danger exists when institutions and individuals come to believe in and develop a stake in the pattern of linear development that the tendency fosters. Utilities want the conditions that fostered their growth and success in the past to continue in the future. Relying on forecasts for this reason, in the face of changing ecological and economic conditions, is a major contributor to our energy problem. When used as the basis of policy, forecasts can actually obscure both dangers and opportunities ahead. Forecasts cannot point in new directions; and in a rapidly changing world, overreliance on forecasting can be socially maladaptive.

The difference between forecast-generated plans and a comprehensive planning process lies in the establishment of explicit goals and criteria for measuring progress. A forecast is an effort to predict the future: to tell what is likely to happen. A plan is an effort to affect the future: to determine what a planner would like to happen and a method for making it happen. A forecast is ostensibly objective; a plan is intentionally prescriptive. In a forecast, the forecaster’s values and goals are usually included only implicitly; in a plan, values and goals are explicit.

In the United States, social planning is often considered contrary to the notions of democratic institutions. In reality, overreliance on forecasting and the lack of a comprehensive planning process are what conflict with democratic ideals by inhibiting choice. A comprehensive plan can include and even encourage diverse (even seemingly conflicting) values, and it can attempt to accommodate unexpected changes.

Until the late 1970s state and federal legislative and regulatory institutions served only to approve or disapprove utility forecasts and plans, never to actively plan or assume responsibility for planning. But the inadequacy of this approach in accommodating resource shortages, rising capital costs, consumer conservation, and other changing factors has given rise to a new conception of utilities and a demand for structural changes in the way we produce and distribute electricity as well as in the institutions that are responsible for it.

The question that consumers and decision makers alike should not ask about the future is, “What is likely to happen?” What must be asked is, “What could and should happen to our electric utility system?” “How do we want it to work?” Emerging from such questions should be a set of specific goals based on our best assessment of possible problems, environmental conditions, and successful adaptation. Such goals should focus on our ability to:

- produce sufficient electricity for future needs

- minimize future demand through comprehensive system planning and user education

- be resistant to breakdown and intervention by as many ecological and political contingencies as possible (i.e., the system should be flexible, diversified, and redundant in both production and distribution)

- employ the minimum necessary overcapacity

- use indigenous and renewable resources as much as possible

- produce the most inexpensive electricity (consistent with the above)

- employ cost accounting that is comprehensive, long range, and consistent for evaluating alternatives

- be as responsive as possible to new technologies and techniques

- rely to the greatest extent possible on the marketplace for the production of electricity

- minimize environmental impacts.

The next section summarizes the characteristics of a system that could address such goals.

POSSIBILITIES: AN EMERGING IMAGE OF UTILITIES

I have tried to demonstrate that strong environmental constraints preclude resolving the problems of America’s electrical utility industry by traditional approaches, but I have also indicated that these constraints do not totally preclude a resolution. The established conception of electric utilities (and their structure) held by utility planners, regulators, and public policy makers is what impedes effective and adaptive responses.

Rapidly changing social, economic, political, and ecological conditions are mandating adaptive structural reform of the electric utility industry. Whether such structural reform is to be accomplished with minimum negative social impact (i.e., with minimum social costs) is a matter of public policy. Delay will raise the costs. Long-term solutions must emerge from a reconsideration of public needs, from a comprehensive assessment of the problems, and from an inventory of all the available technological and policy alternatives.

This book explores the characteristics and components of an alternative approach. Various articles summarize specific characteristics, possibilities, and problems for such a system. I will summarize here the general characteristics in order to create a framework within which the other articles can be understood.

The emerging electrical utility industry will have ten major characteristics:

- a major role for cogeneration and renewable energy resources
- more effective utilization of regionally indigenous resources
- a greatly expanded role for load and end-use management in relation to supply management
- a greater degree of redundancy in both generation and transmission resulting from reduced economies of scale
- a greater diversity of resources and technologies for generation
- the redefinition of a grid from a simple distribution system to an absorber and redistributor of decentralized production
- a greater dependence on the marketplace to determine the cost effectiveness of various production alternatives
- a greater degree of institutional separation between the generation and distribution functions of electric utilities
- the development of an independent service industry to manage decentralized production facilities
- some expansion of both decentralized and central system storage

A brief introduction to each of these concepts follows.

A Dominant Role for Cogeneration and Renewable Energy Resources

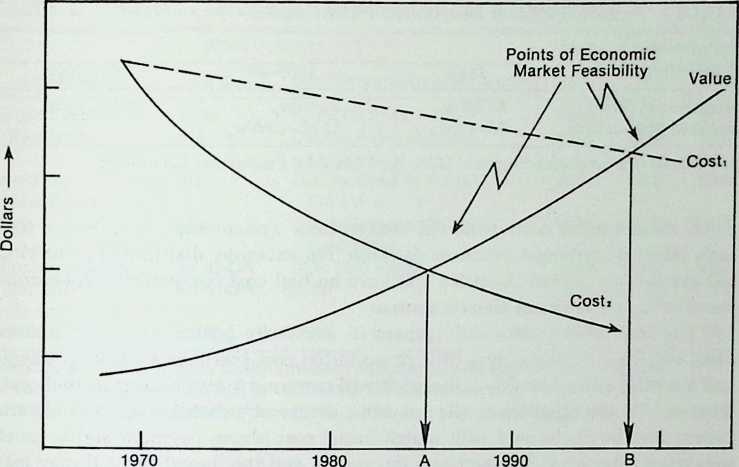

There is heated debate among authorities over how much of future demand can be met by renewable (sustainable and free) energy resources. Utilities have been reluctant to invest in renewable technologies because their reliability in modern electrical grids is largely unproven and because many inherently require small-scale production facilities and, consequently, structural reform to develop. But in the medium-term future, increased use of renewables offers the best practical alternative to rising prices, decreasing net availability, and potential supply disruptions resulting from diminishing reserves of traditional fuels. Further, they offer the hope of increased environmental quality and safety. As safety and environmental costs are increasingly factored into economic assessments, and as prices of traditional fuels rise, the economic attractiveness of alternatives improves.

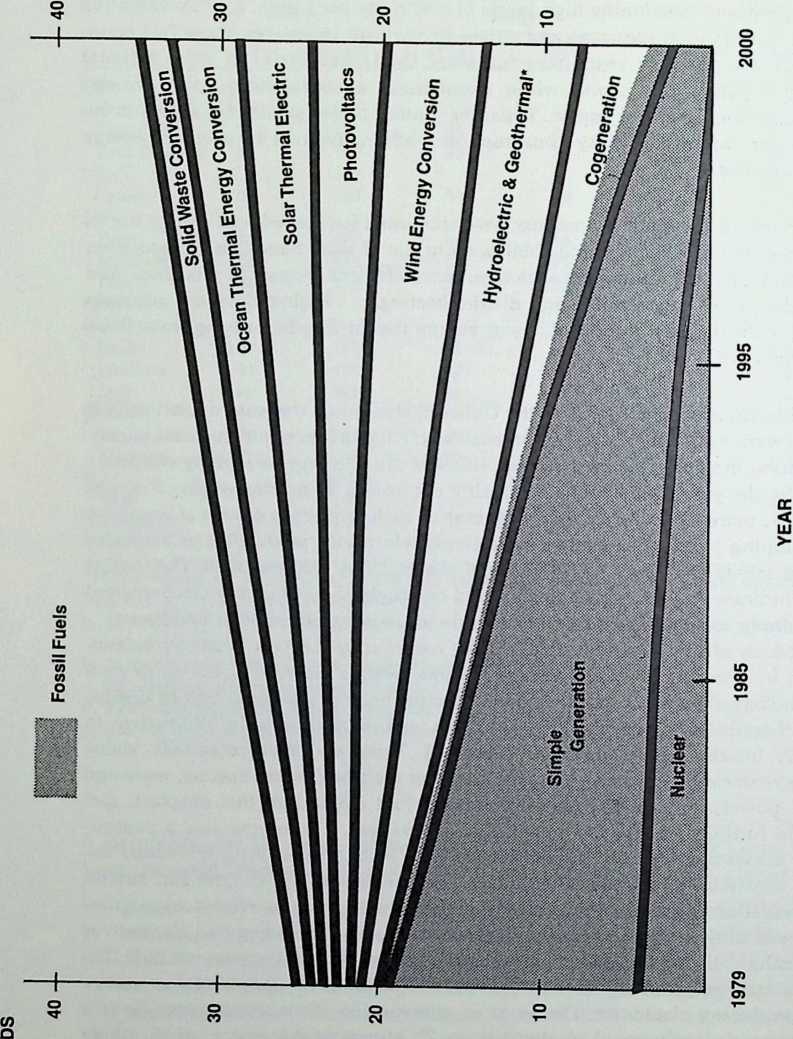

Chapter 4, by Lisa Frantzis, addresses this subject. It summarizes a series of major independent technology assessment studies to indicate the potential of cogeneration and renewables. It concludes, as have numerous subsequent national studies by the Harvard Business School, the Union of Concerned Scientists, the World Game, and the Solar Energy Research Institute, that renewables could play a major role in our electric energy supply. But this conclusion raises a series of more complex questions about how a system must be organized to use renewables efficiently.

More Effective Utilization of Indigenous Resources

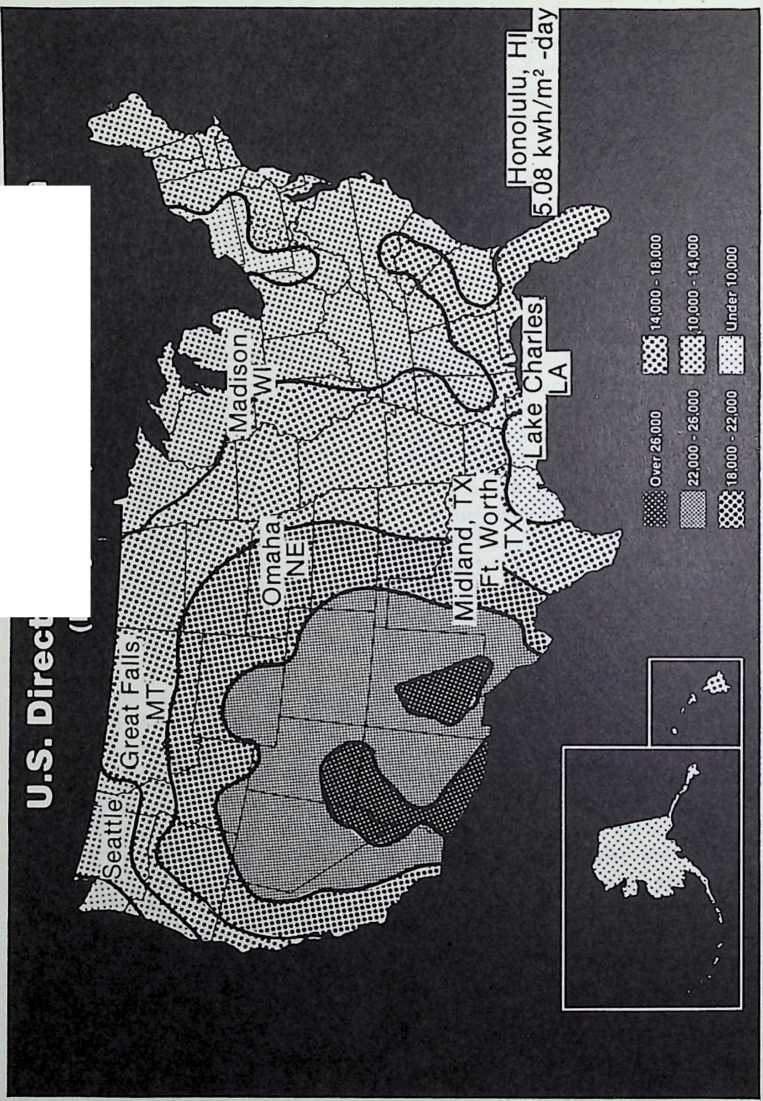

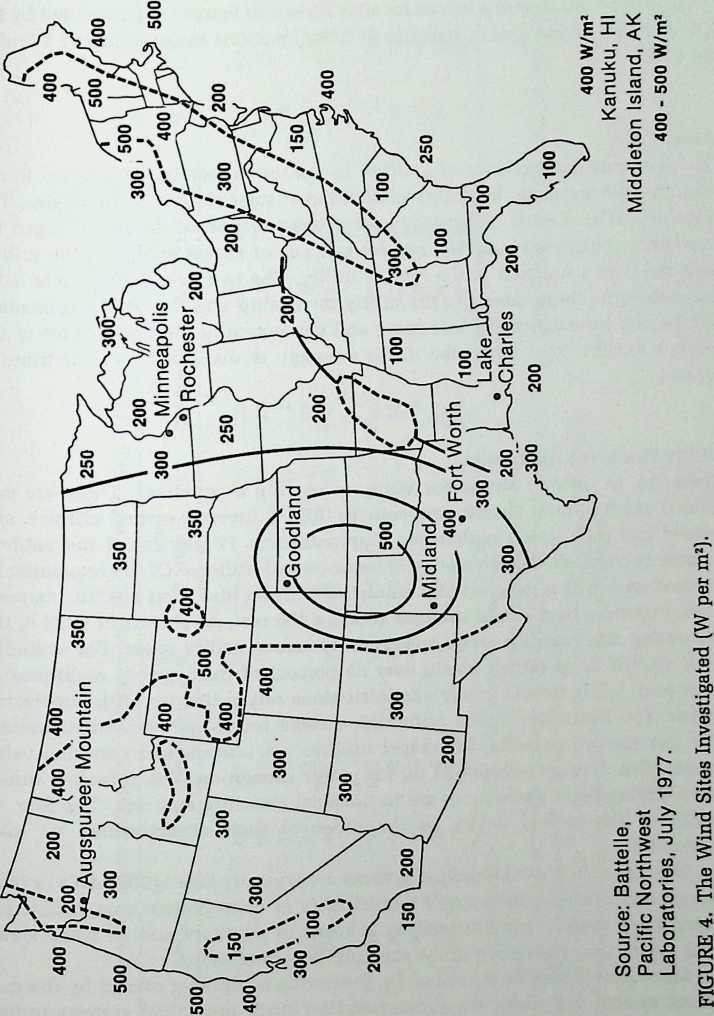

It is important not only that renewable resources be used whenever possible but that those resources that are indigenous to each region be integrated into the system. Resource mappings, such as those used in chapters 4 and 7, indicate that each renewable resource is unevenly distributed geographically but that every region benefits from the availability of some renewables.

Cogeneration (the sequential production of electricity and steam for heat or industrial processes) can be considered an indigenous energy source for the purposes of this discussion. This is because any intensive user of fossil fuels for steam or heat generation is also a potential producer of relatively low-cost electricity. The efficiencies achievable by combining electricity production with such uses are enormous in comparison with separate production. Until recently, only the very largest of industrial users were seen as potential cogeneration sites. Recent advances in cogeneration technology, however, are dramatically reducing the economies of scale. Relatively small-scale systems (under 200 kW) are now being installed throughout the United States at hospitals, universities, public institutions, and at commercial and industrial sites. Very small-scale systems, developed in Israel and in Europe, are now being marketed even for multifamily residential applications. Wastes (municipal, industrial) are other indigenous energy sources discussed in chapter 4. Utilizing indigenous resources increases local self-reliance, reduces transportation costs and energy requirements, and creates economic development (see chaps. 2, 3, and 12).
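The size of the cogeneration advantage is usually expressed as primary energy savings. These compare the fuel a combined unit burns with the fuel that a separate power station and a separate boiler would burn to deliver the same electricity and heat. The Python sketch below computes that ratio. All efficiencies and outputs in the example are hypothetical placeholders, not data from chapter 4.

```python
def primary_energy_savings(elec_out, heat_out, fuel_chp, eta_elec_ref, eta_heat_ref):
    """Fraction of fuel saved by cogeneration relative to separate production.

    elec_out, heat_out: useful electricity and heat delivered (same energy units)
    fuel_chp:           fuel burned by the cogeneration unit to deliver both
    eta_elec_ref:       efficiency of the reference (separate) power station
    eta_heat_ref:       efficiency of the reference (separate) boiler
    """
    fuel_separate = elec_out / eta_elec_ref + heat_out / eta_heat_ref
    return 1 - fuel_chp / fuel_separate


# Hypothetical example: 100 units of fuel yield 30 units of electricity and
# 50 units of useful heat; the alternative is a 33%-efficient central station
# plus an 85%-efficient boiler.
savings = primary_energy_savings(30, 50, 100, 0.33, 0.85)
print(f"fuel saved: {savings:.0%}")
```

Under these invented assumptions the combined unit needs about a third less fuel than separate production.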

Expanded Role for End-Use and Load Management

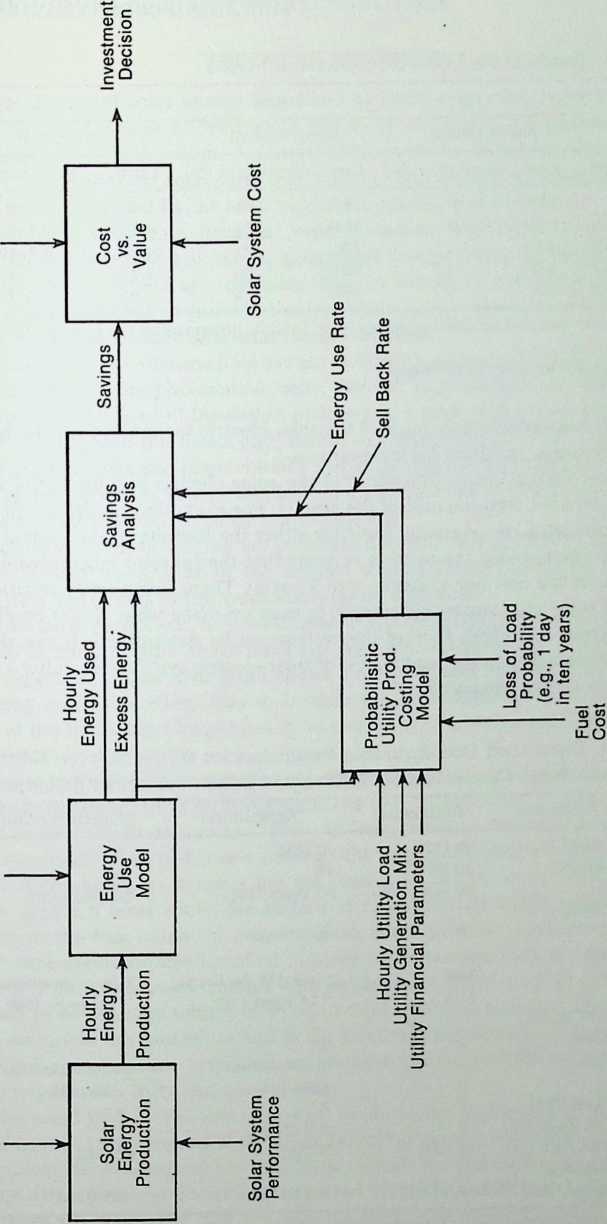

Historically, utilities have planned supply to meet forecasted demand. As supplies diminish and prices rise, managing demand to match available supply becomes a cost-effective activity. This will be particularly true as reliance on renewable resources increases, because their production depends more on variable conditions than on demand. The degree of reliability that can be derived from these variable sources is discussed in chapters 5 and 6 and modeled by James Kahn in chapter 8.

As interest in load management has grown, many sophisticated techniques have emerged to control use. They fall into three general categories: (a) those that reduce demand by increasing appliance efficiency and reducing waste; (b) those that direct and control load to make the character of demand curves match the character of supply (e.g., time-of-day rates, load shedding, interruptible rates, radio control systems); and (c) end-use management (discussed by Lovins in chap. 2). End-use management means matching available energy resource types and characteristics to use requirements and characteristics. Demand reduction and load management represent an entirely new set of planning and management tools that have only begun to be used. They open up new opportunities for increasing reliance on renewable resources.
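The second category, shaping demand to fit supply, can be pictured as moving deferrable load out of hours when demand exceeds available supply and into hours with a surplus. The Python sketch below is a deliberately simple greedy version. The function name is hypothetical. So is the assumption that a fixed fraction of each hour's load can be moved to any other hour; real programs must respect when loads can actually be deferred.

```python
def shift_load(demand, supply, shiftable_fraction):
    """Greedy load-shifting sketch.

    demand, supply: hourly values of equal length, in the same units (e.g. MW).
    shiftable_fraction: share of each hour's original demand assumed movable.
    Load is moved from hours where demand exceeds supply into the hours with the
    largest surplus, never pushing a receiving hour above its supply. Load is
    only moved, never dropped, so total energy is unchanged.
    """
    new = list(demand)
    for h in range(len(new)):
        deficit = new[h] - supply[h]
        if deficit <= 0:
            continue
        movable = min(deficit, shiftable_fraction * demand[h])
        # Visit candidate hours from largest surplus to smallest.
        for g in sorted(range(len(new)), key=lambda i: new[i] - supply[i]):
            surplus = supply[g] - new[g]
            if surplus <= 0 or movable <= 0:
                break
            moved = min(surplus, movable)
            new[g] += moved
            new[h] -= moved
            movable -= moved
    return new


print(shift_load([50, 80, 120, 90], [100, 100, 100, 100], 0.25))  # -> [70, 80, 100, 90]
```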

A Greater Degree of Redundancy in Generation and Transmission

Most renewable resources are distributed; that is, they have relatively lower intensity and are dispersed over large geographic areas, rather than concentrated (as fossil and nuclear fuels are) in relatively few locations. Smaller-scale technologies are required to optimize their conversion to electricity, in comparison with traditional fuels. This means that economies of scale in production must be shifted from the actual generation of electricity to the production of generators. Mass production of small and medium-scale generators has numerous benefits. A large number of small plants means greater employment per unit of energy (see chap. 12), shorter construction lags (see chap. 2), greater resistance to system disruption (it is less likely that one hundred small plants will go out simultaneously than a single large plant), and reduced demand for capital expenditures for generation because of reduced demand for reserve capacity (see chaps. 2, 6, and 8).
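The reserve argument can be illustrated with a simplified capacity-outage calculation of the kind used in reliability planning. Each generating unit is treated as independently unavailable with some forced-outage probability. Spare units are then added until the chance that more units are out than the spares can cover falls below a chosen risk level. The Python sketch below does this. The 10 percent outage rate, the risk target, and the function names are hypothetical. Real studies also account for load variation and maintenance schedules, and common-mode failures can violate the independence assumption.

```python
import math


def outage_tail(n_units, max_out, q):
    """P(more than max_out of n_units are out at once), each unit out
    independently with probability q (binomial model)."""
    pmf = (1 - q) ** n_units  # P(exactly 0 units out)
    cdf = pmf
    for k in range(max_out):
        # Binomial recurrence: P(k + 1) from P(k).
        pmf *= (n_units - k) / (k + 1) * q / (1 - q)
        cdf += pmf
    return max(0.0, 1 - cdf)


def reserve_mw(load_mw, unit_mw, q, risk_target):
    """Smallest spare capacity, in whole units, that keeps the probability of
    being unable to serve load_mw at or below risk_target."""
    base_units = math.ceil(load_mw / unit_mw)
    extra = 0
    while outage_tail(base_units + extra, extra, q) > risk_target:
        extra += 1
    return extra * unit_mw


if __name__ == "__main__":
    # Hypothetical system: 10,000 MW of load, 10% forced-outage rate per unit,
    # and a 1-in-1,000 tolerated chance of a capacity shortfall.
    for size in (1000, 100, 10):
        print(f"{size:>5}-MW units: reserve of {reserve_mw(10_000, size, 0.1, 1e-3):,} MW")
```

With these invented numbers, the required reserve falls sharply as unit size shrinks. This is the qualitative effect described above. Any specific savings figures quoted in this book come from the authors' own analyses, not from this sketch.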

It is important to note that increased redundancy and reduced scale of production units mean greater reliability from renewables than can be expected from single renewable units. It is likely that the wind will be blowing somewhere in a service area all of the time but unlikely that it will be blowing in any single location as often. This is true of most decentralized sources and is discussed in detail by Kahn in chapter 8.
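The statistical point can be sketched with a binomial model. Suppose each of n sites in a service area is producing at a given moment with probability p, and sites are assumed to be independent of one another. The chance that at least k of them are producing then rises quickly with n. The independence assumption is optimistic, since wind at nearby sites is correlated. The function name and numbers below are hypothetical; Kahn's chapter 8 treats the question in detail.

```python
from math import comb


def prob_at_least(k, n_sites, p):
    """P(at least k of n_sites are producing), with sites independent and
    each producing with probability p."""
    return sum(comb(n_sites, j) * p**j * (1 - p) ** (n_sites - j)
               for j in range(k, n_sites + 1))


if __name__ == "__main__":
    # Hypothetical: each site has usable wind 30% of the time.
    for n in (1, 5, 20):
        print(f"{n:>2} sites: P(wind somewhere) = {prob_at_least(1, n, 0.3):.3f}")
```

Under these assumptions a single site has wind 30 percent of the time. By contrast, the chance that at least one of twenty sites has wind exceeds 99.9 percent.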

Reconceptualizing the Grid as an Energy Absorber and Redistributor Rather than as a Single Distribution System

Reliance on centralized generation requires a grid in which energy flows one way (conceptually if not literally) from producer to consumer. In a grid that utilizes diverse and smaller-scale renewable systems, energy must be understood to flow omnidirectionally. As Sørensen and Kahn point out (chaps. 6 and 8), the grid itself can serve as an equalizer of the ebbs and peaks of varying production. As the number of small producers (or contributors) to the grid grows, it is essential to understand the impacts. Sørensen argues that between 10 and 20 percent of a grid’s total production can be produced from dispersed and variable sources before the need for storage becomes critical.

Dependence on the Marketplace to Determine the Cost Effectiveness of Various Production Alternatives

The problem of determining which sources and technologies to use to meet demand is a complicated management problem. As the proportion of dispersed to central generation grows, the problem will grow. The question that must be addressed is, “What technology can meet demand most cost-effectively?” As Huettner points out (chap. 9), the question may best be answered by using the marketplace as an analogy. In an emerging new role, the utility would become a broker between buyers and sellers of electricity. With smaller stations, the financial risks and costs of overcapacity to producers and consumers are reduced. Thus it may be easier for utilities to find new capacity in small-scale units. Tax incentives such as energy credits and accelerated depreciation allowances in combination with enabling legislation (see chap. 10) can encourage small-scale production. Huettner discusses this potential extensively in chapter 9. As the cost of capital rises for central capacity generation, and debt threatens the viability of utilities, they may well be increasingly interested in being relieved of their responsibilities and burdens for generation.
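The brokerage role described here can be sketched as a simple merit-order rule: the broker accepts the cheapest offers first until demand is met. The Python sketch below assumes pay-as-bid pricing and ignores transmission limits and reliability constraints. The producer names, quantities, and prices in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    producer: str       # hypothetical seller
    capacity_mw: float  # amount offered
    price: float        # asking price per MWh (any currency)


def clear_market(offers, demand_mw):
    """Accept the cheapest offers first until demand is met (merit order).

    Returns (accepted, cost, unmet): accepted is a list of (producer, MW),
    cost is the pay-as-bid cost of one hour at those levels, and unmet is any
    demand the offers could not cover.
    """
    accepted, cost, remaining = [], 0.0, demand_mw
    for offer in sorted(offers, key=lambda o: o.price):
        if remaining <= 0:
            break
        take = min(offer.capacity_mw, remaining)
        accepted.append((offer.producer, take))
        cost += take * offer.price
        remaining -= take
    return accepted, cost, max(remaining, 0)


offers = [Offer("central station", 500, 40.0),
          Offer("industrial cogenerator", 50, 30.0),
          Offer("wind cooperative", 30, 25.0)]
print(clear_market(offers, 100))
```

In this toy example the broker never needs to know who owns which plant, only what each offer costs. This is the sense in which the utility would act as a broker rather than as a producer.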

A Greater Degree of Institutional Separation between Production and Distribution

Increasing dependence on markets for production necessarily involves independent producers competing to sell as well as potential consumers competing to buy. The utility as broker and distributor would be less and less of a force in production. This approach requires redefinition of the traditional electric utility, but it is not without precedent. In the communications industry a “utility” is a public service industry responsible for distribution of a commodity (information). Such utilities are barred from controlling access to the network by producers or consumers except for the imposition of quality standards to protect the network itself. In the electric utility industry, utilities have historically been licensed and protected not only as the distributors but as the sole producers of the commodity they distribute.

In a marketplace, producers of an inefficient or overly expensive alternative are penalized with lack of sales. Electric utilities, on the other hand, have been rewarded for planning expensive overcapacity with rate increases based on a guaranteed return on investment. This problem would be ameliorated by removing the rights and responsibilities for generation from utilities (as Huettner discusses in chap. 11).

The Development of an Independent Service Industry to Manage Decentralized Production

Lindsley points out in chapter 13 that the primary problem with decentralized production is the reliability of individual plants owned by individuals and institutions without expertise in their operation and without capital to maintain parts and service. Service is a labor-intensive operation, and dispersed generation can work only with efficient and well-equipped regional service and management industries. A considerable degree of standardization (not now evident) in equipment design would be required for an economically viable industry over the long haul.

Some Expansion of Both Centralized and Decentralized Storage

Reliability will always be an issue as the percentage of variable production increases (e.g., with the growth of the photovoltaic industry). The need for storage and its characteristics are discussed by Robert Morris in chapter 5. But decisions about storage (how much and what kind) can be made only on the basis of market conditions, the effectiveness of load management, and the character of supply.

To a large degree, decentralized storage can be thought of as a form of load and end-use management. Wind may be better suited as a tool for pumping water and pressurizing air to displace electric generation, because pumped water and compressed air can be stored. In such instances, the windmill serves as a conservation rather than a production system, and variability is not a problem. Central storage of energy as hydrogen or as pumped water is also an option, but storage should always be considered a last resort in grid management because of its high costs.

The kinds of changes discussed in this book for our electric utility infrastructure will not come overnight, nor is it likely that the changes will be as clear-cut as suggested by this brief characterization. The ten characteristics discussed above are presented as directions in which change could take place. Many of the ideas, radical when this book was conceived, are now at least partially accepted in the industry. Small-scale, decentralized production is, under the federal and state guidelines discussed by Thompson in chapter 10, now growing rapidly, although its contribution is still relatively small.

NOTE

1. Howard Odum, “Energy, Ecology, and Economics,” Ambio, vol. 2, no. 6, pp. 220-27 (Swedish Academy of Science, 1973).

AMORY B. LOVINS

I

¶ Technology Is the Answer! (But What Was the Question?)

Amory Lovins presents a critical review of the electrical generation industry and of the concepts that currently guide decision-making in it. His chapter, written in 1978, is a personal observation in the sense that Lovins does not include in it all the details and documentation that he has presented to support his observations elsewhere. He concentrates on casting a shadow of doubt over the dominant assumptions underlying policy development in the power production agencies, private and public. Regardless of whether we agree with Lovins’s proposals, the article is an excellent introduction to an alternative view of the inadequacies of the present electrical generation system. Lovins’s years of research and analysis, carefully documented in other papers, show themselves clearly in this plea for a saner and more useful electrical generation system.

The energy problem is both important in its own right and useful as an integrating principle for examining a wide range of related resource and social problems. I am not concerned here primarily with the technical features of various energy technologies, however seductive, but rather with their appropriateness, their fitness for specific tasks; and having studied them from various perspectives, I shall conclude that what I call “hard” energy technologies are, in Marvin Goldberger’s memorable phrase, spherically senseless; that is, they make no sense no matter how you look at them.

While not under the illusion that facts are separable from values, I attempt in this critique to separate my personal preferences from my analytic assumptions, and to rely not on modes of discourse that might be viewed as overtly ideological but rather on classical arguments of economic and engineering efficiency and of orthodox political economy (arguments which are only tacitly ideological). The residual disagreements to which the results may give rise are in general due to trans-scientific differences that cannot be resolved on technical merits. Such disputes, often masquerading in technical guise, dominate the energy debate, and failure to recognize them for what they are causes no end of confusion. Nor do I wish to imply, by emphasizing economic arguments, that I consider them dispositive or even especially important. A dispassionate analysis of how we actually make major public policy decisions about energy would reveal that we decide on grounds of political expedience and then juggle the subsidies to make the economics come out to justify what we just did. Nonetheless it is a sound tactic to use one’s opponents’ data and criteria so that those who prefer to count only what is readily countable (private internal costs) will find their accustomed analytic methods, if not their conclusions, given gratifying emphasis here.

Copyright © 1983 by Amory B. Lovins. All rights reserved.

Though this is essentially a critique of traditional, currently dominant approaches to the energy problem, I have taken the liberty of adding at the end a very brief sketch of a “soft” energy path that appears to be more justifiable and more likely to succeed.

HARD ENERGY PATHS

The traditional energy policy of Strength through Exhaustion, converting increasingly elusive fossil and nuclear fuels into rapidly growing amounts of premium energy forms (fluid fuels and, especially, electricity) in ever more complex and centralized plants, is inappropriate because, most fundamentally, the tasks for which it was to be appropriate were left undefined. The energy problem was thought to be how to expand secure, affordable, and (preferably) domestic energy supplies to meet extrapolated homogeneous demands. Demand was treated as an aggregate figure (so many quads in 1984) without regard to the most effective type or scale of energy for each end-use task. The result, extravagantly, was a highest common denominator, an array of costly and elaborate trip-hammers capable of cracking any conceivable nut.

More specifically, the chain of argument underlying this approach runs something like this:

1. To meet our social goals (however unspecified or platitudinous) we need
2. rapid undifferentiated economic growth, which requires
3. more or less correspondingly rapid growth in primary energy use, so
4. we rapidly run out of (that is, encounter increasing economic, geopolitical, or ultimately geological difficulties in obtaining) oil and gas, so
5. we must switch to the more abundant solid fuels (coal and uranium), but
6. direct use of coal is not generally feasible or convenient, so
7. we must burn the solid fuels in power stations (and perhaps, ultimately, in coal-synthetics plants), and because of
8. economies of scale
9. we need the power stations to be big, so
10. the only question is which kind of big electric plant to build, and the canonical answer is
11. nuclear (and perhaps coal-fired), built rapidly and profusely.

Embarrassing questions, raised with increasing force and frequency, are perforating this hermetic argument. For example:

- With respect to economic growth, what is growing? What has it to do with welfare? Is its net marginal utility positive? How do we know? How long will it stay that way? If our welfare derives from material rather than from cultural or spiritual things (a bad approximation), should we not try to maintain the maximum stock of physical artifacts with the minimum throughput of resources and effort, and if so, is not most of the GNP something we should try to minimize rather than maximize?

- What is the link between (2) and (3), since we now know that by practical, economically attractive, and purely technical measures we can double by about the turn of the century, roughly redouble by 2025, and further increase thereafter the amount of work wrung from each unit of delivered energy (i.e., that within very broad limits energy and economy can be decoupled)?

- How can we afford the power stations on a truly large scale (large enough to substitute nationally for oil and gas), since central-electric systems are two orders of magnitude more capital-intensive than historic direct-fuel systems and have a very unfavorable cash flow to boot? How can we realistically expect electricity to penetrate the markets accounting for most of our delivered energy needs, namely heat (58 percent) and portable liquid fuels for vehicles (34 percent), in view of (a) its cost (new electricity costs more than a hundred dollars a barrel on a heat-equivalent basis, or several times the present OPEC oil price) and (b) the formidable rate and magnitude problems of the complex, slow-to-deploy electric systems? (Supplying with nuclear power a quarter of the lowest government projections of United States energy needs in 2000, assuming that each unit of electricity replaces two units of fossil fuel throughout the economy, would require us to order a 1,000-MW station, starting now, every 4.7 days. This would require more investment than we now put into all industry. Further, since nuclear power, even in principle, can readily displace only baseload electricity, a small fraction of all our energy and oil uses, it cannot do much for oil dependence: replacing overnight with nuclear power every oil-fired power station, both thermal and gas-turbine, throughout member countries of OECD [Organization for Economic Cooperation and Development] would reduce 1975 OECD oil consumption by only 12 percent and would reduce the fraction of consumption that is imported from 65 percent to 60 percent.)

- Is this result politically, geopolitically, environmentally, and economically acceptable? To whom? Is that enough consensus to proceed on (cf. Vietnam)?

But it is not my purpose here to canvass these interesting arguments, or the more technical ones concerned with (5) and (6). Rather, I shall concentrate on (8-10), considering

- countervailing diseconomies of scale,
- structural problems of central electrification, and
- our ability to make political decisions of this kind.

Diseconomies of Large Scale

Real economies of scale frequently occur in construction. These are often said to follow a scaling law such that increasing unit size by a factor $X$ increases cost by a factor of only $X^n$, where $n$ is of order $2/3$. (In practice the observed economies are often lower, with $n$ approaching unity in some cases.) But there are also countervailing diseconomies of large scale that have seldom been properly taken into account, often because they are outside the system boundary represented by $X$. They include:

[Distribution costs.]{.smallcaps} If we make an energy device, such as a power station, refinery, or gas plant, bigger and more centralized, we must pay for a bigger distribution system to spread the energy out again to dispersed users. In the United States we have gotten to the point, with electricity in 1972 and gas in 1977, where an average residential customer was paying about 30 cents of each utility-bill dollar to buy energy and the other 70 cents to get it delivered. That is a diseconomy of centralization. (Utilities tend not to notice it because they take incremental distribution costs as given and seek the cheapest source of bulk electricity to feed into the “preexisting” grid, even though, in practice, minimizing busbar cost may maximize delivered price.)

[Distribution losses.]{.smallcaps} These are generally of the order of a tenth of throughput, but they are pervasive.

[Loss of opportunities for mass production.]{.smallcaps} If we could make power stations the way we make cars, they would cost at least an order of magnitude less than they do, but we can't because they're too big.

[Loss of opportunities for integration.]{.smallcaps} Total-energy systems (for example, cogeneration, combined heat and power stations) and integration with, for example, food and water systems can save a great deal of money but are generally impracticable at the scale of modern central energy facilities.

[Unreliability.]{.smallcaps} Big plants (notably power stations) tend to be less reliable than smaller ones, for excellent technical reasons that are not likely to go away. (For example, a 500-MW boiler has about ten times as many miles of tubing as a 50-MW boiler, so it will fail more often unless quality control improves tenfold; physically larger turbines have larger blade-root stress and hence require more exotic alloys more likely to have unexpected properties.)

[Larger reserve-margin requirements.]{.smallcaps} Failure in a 1,000-MW power station is embarrassing, like having an elephant die in the drawing room, and requires a second elephant standing by to haul the carcass away (1,000 MW of backup capacity). This is expensive. More numerous smaller stations would be unlikely to fail all at the same time and hence would need less reserve margin: in practice, changing unit size from 1,000 MW to a few hundred MW would provide the same level and reliability of service with about a third less new capacity, and 10-MW units at the substation could save over 60 percent at the margin. With realistic assumptions about reserve requirements and unreliability as a function of size, reserve requirements can change very roughly as the square of changes in unit size. With each big new station costing several billion dollars, the incentives to save a third or more of that capacity are high.
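The rule of thumb at the end of the paragraph above, that reserve requirements change very roughly as the square of changes in unit size, can be written as a one-line function. This is only a sketch of that stated proportionality; the function name is hypothetical, and it does not reproduce the specific savings quoted (about a third, or over 60 percent, of new capacity), which depend on load and outage assumptions not given here.

```python
# Illustrative sketch only: encodes the text's rough rule that reserve
# requirements scale as the square of the change in unit size.
# relative_reserve is a hypothetical name, not a utility planning method.

def relative_reserve(old_unit_mw: float, new_unit_mw: float) -> float:
    """Approximate ratio of new to old reserve requirement under a square law."""
    if old_unit_mw <= 0 or new_unit_mw <= 0:
        raise ValueError("unit sizes must be positive")
    return (new_unit_mw / old_unit_mw) ** 2

# Halving unit size (1,000 MW -> 500 MW) cuts the reserve requirement
# to roughly a quarter under this rule.
print(relative_reserve(1000, 500))  # 0.25
```

Because the relation is quadratic, modest reductions in unit size yield disproportionately large reductions in required reserve, which is the incentive the paragraph describes.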

[Higher indirect costs.]{.smallcaps} There is some empirical evidence that installing a kW(e) in a small and supposedly uneconomic thermal power station can actually cost less capital than installing a kW(e) in a very large station. This is probably because the small station is so much faster to build that it greatly reduces exposure to interest payments, cost escalation, changes in regulatory requirements during construction, and the risk of premature completion.

[Loss of diversity.]{.smallcaps} Big units make it possible to make truly large mistakes at high social and economic risks. Long lead times (tempting one to compress development and scale-up schedules) and technical adventurousness compound the risk of large-scale technical failure. While small systems adapted to particular niches can mimic the strategy of ecosystem development, adapting and hybridizing in constant coevolution with a broad front of technical and social change, large systems tend to evolve in a more linear fashion, like single specialized species (dinosaurs?), with less genotypic diversity and greater phenotypic fragility. Adaptation is further constrained by the accretion of a costly and inflexible infrastructure.

[Inability to distinguish among users.]{.smallcaps} People who use electricity for heating water and would not even know if it went off for a few hours must pay the high premium for the reliability required by elevators, subways, and hospital operating theaters. For the former group this is a large diseconomy.

[Technical thrust toward inflexible design criteria.]{.smallcaps} For example, it is not obvious that a future electric grid operating on dispersed renewable sources (hydro, microhydro, wind, photovoltaics, solar heat engines) will need or be able to justify high standards of frequency stability and phase coherence: with little rotating machinery, it may be worth making a cheaper, sloppier grid. (Frequency stability is, very roughly, five times as good in the United States as in Western Europe, and five times as good there as in Eastern Europe.) Customers who want to use their power supply as a clock could instead use local oscillators (now very cheap) or radio markers like WWV (a National Bureau of Standards shortwave broadcast station providing continuous, precise time and frequency reference signals). In contrast, some new steam turbines now being ordered are so inflexible that they blow up if their operating frequency deviates by a rather small fraction of one percent. Installing such devices locks us, for many decades hence, into very costly and perhaps unnecessarily stringent operating criteria.

[Vulnerability.]{.smallcaps} Big units increase the tendency of central electric systems (and also nonelectric analogs) to be vulnerable to disruption, whether by accident or malice. In a centralized grid a few people can turn off most or all of a country, whereas dispersed sources, while benefiting from user diversity on the grid, would not be dragged down if one or two sources failed: in many instances local users could still continue to use local sources decoupled from the grid. Central electric systems can be designed for high technical reliability in the face of calculable failures, and are (at a high marginal cost), but they tend not to be resilient in the face of incalculable (but numerous and important) surprises.

[Increased local social and environmental stress.]{.smallcaps} This makes site licensing more difficult, so utilities seek to maximize installed capacity per site, so the plant is a worse neighbor than it would otherwise have been, so the political reaction raises transaction costs for the next site, and so on exponentially. We are well into this loop. (It can be argued that more dispersed sources are harder to submit to environmental controls. European experience contradicts this. Smaller units can often use inherently cleaner technologies, be integrated into total-energy systems that minimize fuel burned per unit function delivered, and, since they are sited amongst their users, must be and are built and run properly, whereas central, remotely sited units often have the political clout to alter or ignore environmental controls.)

[Higher complexity.]{.smallcaps} Hence longer downtime, more difficult repairs, higher training and equipment costs for maintenance, higher carrying charges on costly spare parts made in small production runs, etc. Management also may become more complex, with high fixed charges encouraging haste and corner-cutting. (Ten percent annual interest on a billion dollars is over three dollars per second.) Technical problems, interacting with environmental, social, economic, and political problems, are often unforeseen, especially in a world of lags, nonlinearities, threshold effects, irreversibilities, electoral cycles, and other peculiarities unlikely to be foreseen by plant designers and operators. These surprises tend to promote the already dangerous increase in the likelihood and consequences of mistakes.
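The parenthetical figure on interest charges can be checked with a few lines of arithmetic. This sketch assumes simple (non-compounding) interest spread evenly over a 365-day year; the constant and function names are illustrative only.

```python
# Checks the text's figure: 10 percent annual interest on one billion dollars,
# expressed per second. Assumes simple interest and a 365-day year.

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds

def interest_per_second(principal: float, annual_rate: float) -> float:
    """Dollars of interest accrued per second at a simple annual rate."""
    return principal * annual_rate / SECONDS_PER_YEAR

rate = interest_per_second(1e9, 0.10)
print(f"${rate:.2f} per second")  # $3.17 per second
```

At about $3.17 per second, the result agrees with the "over three dollars per second" stated above; a leap year or compounding would shift it only slightly.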

Another category of structural problems associated with centralization, especially (for illustration) in electrical systems, often manifests itself in higher costs:

[Centralization and autarchy.]{.smallcaps} Allocating enormous amounts of scarce resources to such a demanding enterprise in the face of competing claims, especially when the market is unwilling to do so, requires a strong central authority: an Energy Security Corporation (to evade market forces) and an Energy Mobilization Board (to evade democratic forces). Big, complex energy systems require big, complex bureaucracies to run them and to say who can have how much energy at what price. The macroeconomic side-effects of extraordinary capital intensity (for example, inflation, unemployment, high interest rates) elicit further central management, chiefly by distortion (for example, further subsidies), taking ever more bizarre forms in an effort to protect a sector too big to allow to crash.

[Encouragement of oligopoly.]{.smallcaps} Small business can’t make big machines.

[Irrelevance to the needs of most of the people in the world.]{.smallcaps} Big, elaborate electrified systems are probably the least sound energy investment for developing countries, though elites often desire them for prestige, largely because of the bad example set by the industrialized countries. In my view, for example, the greatest contribution of United States nuclear reactors to the global problem of nuclear bomb proliferation is undoubtedly the example they set, whereby all countries feel entitled to similar reactors and the materials associated with them, an entitlement affirmed by every president since Eisenhower. It is unconscionable for this country to rely on an energy source we decry as proliferative elsewhere (albeit hypocritically, in view of our own reliance on nuclear bombs), and we cannot expect that if we, with our fossil fuels, money, and skills, continue with nuclear power, we will see restraint from other countries lacking these advantages. But that is another paper. It is noteworthy that most electrical equipment vendors are so oriented toward large-scale OECD markets that they are unable or unwilling to supply small units for developing countries, which must therefore buy large units and bear the consequences, such as the blackout in Thailand on 18 March 1978, in which 33 million people lost power for up to 9 hours in Bangkok and 56 other provinces owing to the failure of one 1,300-MW(e) plant in a 2,500-MW(e) grid. In contrast, cooperative marketing would be a natural and mutually beneficial trend for smaller systems, especially those suited to rural development (photovoltaics, wind power, solar heat engines, etc.).

[Allocation of costs and benefits to different groups of people at opposite ends of the distribution system.]{.smallcaps} This produces strong interregional conflict.

[Centrifugal politics.]{.smallcaps} Central siting and regulatory authority versus local autonomy is already a potent constraint on energy expansion and is threatening severe strains in the federal (and state/local) system here and abroad.

[Engineering inefficiency.]{.smallcaps} Electricity often cannot in practice be used to its theoretical thermodynamic advantage. Owing largely to proposed electrification, more than half the projected primary energy growth in most industrial countries would be lost in conversion and distribution before it ever got to final users.

[Implications for technologists.]{.smallcaps} With technologies requiring many years’ effort in big, anonymous research teams, personal responsibility and initiative are diminished and may even slip through the cracks altogether. Powerful promotional constituencies develop and take on a life of their own. Further, as Freeman Dyson points out, big technologies are less fun to do and too big to play with, so technologists may not be as innovative as with smaller things that lend themselves to tinkering. Indeed, because soft energy technologies are so accessible that one person, even without much technical training, can make an important contribution to them, they can profit fully from human diversity. There is, as far as we know, nothing in the universe as powerful as four billion minds wrapping around a problem. That is the source of the extraordinarily rapid progress we are now seeing in soft technologies.

Finally, and blending with earlier categories, some special problems of political economy beset centralized (especially electric) systems and tend to raise their economic costs.

[Incomprehensibility.]{.smallcaps} In what Rob Socolow (in Patient Earth [New York: Holt, Rinehart, and Winston, 1971]) has called "consumer humiliation," users are compelled to depend on systems they cannot understand, modify, repair, or control. They are then told that it is desirable for them to stop worrying and leave it all to the experts; but occasional reminders that experts are fallible leave a sense of unease and, for some, alienation. Some people like to understand their own systems, and most, I think, suspect that systems allegedly too complex for ordinary people to make up their own minds about are systems which a democracy ought not to have, for they remove any chance of accountability and substitute for the democratic process a sort of . . .

[Elitist technocracy.]{.smallcaps} "We the experts" replaces "we the people," gratifying for the experts but likely to lead to a loss of legitimacy, a dangerous and hard-to-reverse trend that rubs off elsewhere.

[Inequitable access.]{.smallcaps} Remote siting, which unloads social costs on politically weak agrarians (Navajos, Wyoming ranchers, Montanans, Alaskans) to provide energy to politically strong slurbians (Los Angelenos), is bad enough; worse are technologies so arcane, complex, large, or costly that only wealthy people or large corporations stand much chance of benefiting from them. (This is especially a problem in developing countries: if a new power station is built in India, for example, roughly 80 percent of its output tends to go to urban industry, 10 percent to rich urban households, and 10 percent to villages, and in the end 1 percent might end up helping the poor people for whom it was ostensibly intended. Electricity from any source is costly and hence unlikely to reach those people (some two billion at least) who are outside the market system and have neither an electric outlet nor anything to plug into it.)